As control system professionals, it is in our interest to ensure our measurement and control systems are secure from unauthorized access. It is helpful to regard system security similarly to how we regard system safety or reliability, as these concerns share many common properties:

- Just as accidents and faults are inevitable, so are unauthorized access attempts

- Just as 100% perfect safety and 100% perfect reliability are unattainable, so is 100% security

- Digital security needs to be an important criterion in the selection and setup of industrial instrumentation equipment, just as safety and reliability are important criteria

- Maximizing security requires a security-savvy culture within the organization, just as maximizing safety requires a safety-savvy culture and maximizing reliability requires a reliability-centric design philosophy

Also similar to safety and reliability is the philosophy of defense-in-depth, which is simply the idea of having multiple layers of protection in case one or more fail. Applied to digital security, defense-in-depth means not relying on a single mode of protection (e.g. passwords only) to protect a system from attack.

Policy-based Strategies

These strategies focus on human behavior rather than system design or component selection. In some ways these strategies are the simplest to implement, as they generally require little in the way of technical expertise. This is not to suggest, however, that policy-based security strategies are therefore the easiest to implement.

On the contrary, changing human behavior is usually a very difficult feat. Policy-based strategies are not necessarily cheap, either: although little capital is generally required, operational costs will likely rise as a result of these policies.

These costs may be directly monetary, may come as additional staffing, and/or may simply result from impeding normal work flow (e.g. pulling personnel away from their routine tasks to do training, requiring personnel to spend more time doing things like inventing and tracking new passwords, slowing the pace of work by limiting authorization).

Foster Awareness

Ensure all personnel tasked with using and maintaining the system are fully aware of security threats, and of best practices to mitigate those threats. Given the ever-evolving nature of cyber-attacks, this process of educating personnel must be continuous.

A prime mechanism of cyber-vulnerability is the casual sharing of information between employees, and with people outside the organization. Information such as passwords and network design should be considered “privileged” and should only be shared on a need-to-know basis.

Critical security information such as passwords should never be communicated to others or stored electronically in plain (“cleartext”) format. When necessary to communicate or store such information electronically, it should be encrypted so that only authorized personnel may access it.

In addition to the ongoing education of technical personnel, it is important to keep management personnel aware of cyber threats and threat potentials, so that the necessary resources will be granted toward cyber-security efforts.

Employ Security Personnel

For any organization managing important processes and services, “important” being defined here as threatening if compromised by the right type of cyber-attack, it is imperative to employ qualified and diligent personnel tasked with the ongoing maintenance of digital security. These personnel must be capable of securing the control systems themselves and not just general data systems.

One of the routine tasks for these personnel should be evaluations of risks and vulnerabilities. This may take the form of security audits or even simulated attacks whereby the security of the system is tested with available tools.

Utilize Effective Authentication

Simply put, it is imperative to correctly identify all users accessing a system. This is what “authentication” means: correctly identifying the person (or device) attempting to use the digital system. Passwords are perhaps the most common authentication technique.

The first and foremost precaution to take with regard to authentication is to never use default (manufacturer) passwords, since these are public information. This precautionary measure may seem so obvious as to not require any elaboration, but sadly it remains a fact that too many password protected devices and systems are found operating in industry with default passwords.

Another important precaution to take with passwords is to not use the same password for all systems. The reasoning behind this precaution is rather obvious: once a malicious party gains knowledge of that one password, they have access to all systems protected by it. The scenario is analogous to using the exact same key to unlock every door in the facility: all it takes now is one copied key and suddenly intruders have access to every room.

Passwords must also be changed on a regular basis. This provides some measure of protection even after a password becomes compromised, because the old password(s) no longer function.

Passwords chosen by system users should be “strong,” meaning difficult for anyone else to guess. When attackers attempt to guess passwords, they do so in two different ways:

- Try using common words or phrases that are easy to memorize

- Try every possible combination of characters until one is found that works

The first style of password attack is called a dictionary attack, because it relies on a database of common words and phrases. The second style of password attack is called a brute force attack because it relies on a simple and tireless (“brute”) algorithm, practical only if executed by a computer.

A password resistant to dictionary-style attacks is one not based on a common word or phrase. Ideally, that password will appear to be nonsense, not resembling any discernible word or simple pattern. The only way to “crack” such a password, since a database of common words will be useless against it, will be to attempt every possible character combination (i.e. a brute-force attack).
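As a minimal sketch of what a "nonsense" password looks like in practice, the following Python fragment draws each character independently from a 62-character alphabet using the standard `secrets` module. The function names, the default length of 16, and the word-list check are illustrative assumptions rather than anything prescribed above.

```python
import secrets
import string

# Alphabet of 62 characters: upper-case letters, lower-case letters, digits.
ALPHABET = string.ascii_letters + string.digits


def random_password(length: int = 16, alphabet: str = ALPHABET) -> str:
    """Return a password whose characters are drawn independently at random."""
    if length < 1:
        raise ValueError("length must be at least 1")
    # secrets.choice uses the operating system's cryptographic random source.
    return "".join(secrets.choice(alphabet) for _ in range(length))


def looks_like_dictionary_word(password: str, wordlist: set[str]) -> bool:
    """Crude check: True if the password, ignoring case, appears in the word list."""
    return password.lower() in wordlist
```

A password produced this way resembles no word or simple pattern, so a dictionary attack gains nothing and the attacker is left with brute force. The word-list check is deliberately simplistic; it only catches exact matches.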

A password resistant to brute-force-style attacks is one belonging to a huge set of possible passwords. In other words, there must be a very large number of possible passwords limited to the same alphabet and number of characters. Calculating the brute-force strength of a password is a matter of applying a simple exponential function:

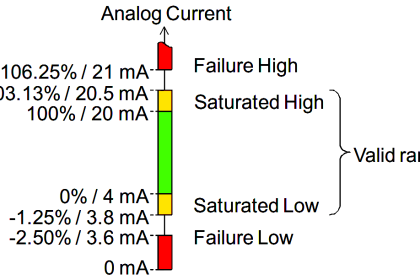

$S = C^n$

Where,

S = Password strength (i.e. the number of unique password combinations possible)

C = Number of available characters (i.e. the size of the alphabet)

n = Number of characters in the password

For example, a password consisting of four characters, each character being a letter of the English alphabet where lower- and upper-case characters are treated identically, would give the following strength:

$S = 26^4 = 456976$ possible password combinations

If we allowed case-sensitivity (i.e. lower- and upper-case letters treated differently), this would double the value of C and yield more possible passwords:

$S = 52^4 = 7311616$ possible password combinations

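Obviously, then, passwords using larger alphabets are stronger than passwords with smaller alphabets. Lengthening a password helps as well: since n is the exponent, each added character multiplies S by C.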
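The arithmetic is easy to check with a few lines of Python. This is only a sketch of the exponential relationship between alphabet size, length, and strength. The `guesses_per_second` parameter is a hypothetical attacker speed chosen by the reader, not a figure from any real attack tool.

```python
def password_strength(alphabet_size: int, length: int) -> int:
    """Number of unique passwords: alphabet size raised to the password length."""
    return alphabet_size ** length


def worst_case_seconds(alphabet_size: int, length: int,
                       guesses_per_second: float) -> float:
    """Time to try every combination at an assumed (hypothetical) guessing rate."""
    return password_strength(alphabet_size, length) / guesses_per_second
```

`password_strength(26, 4)` returns 456976 and `password_strength(52, 4)` returns 7311616, matching the two examples. Keeping the 26-letter alphabet but doubling the length to eight characters gives `password_strength(26, 8)`, which is 208827064576.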

Cautiously Grant Authorization

While authentication is the process of correctly identifying the user, authorization is the process of assigning rights to each user. The two concepts are obviously related, but not identical. Under any robust security policy, users are given only as much access as they need to perform their jobs efficiently.

Too much access not only increases the probability of an attacker being able to cause maximum harm, but also increases the probability that benevolent users may accidentally cause harm.

Perhaps the most basic implementation of this policy is for users to log in to their respective computers using the lowest-privilege account needed for the known task(s), rather than to log in at the highest level of privilege they might need. This is a good policy for all people to adopt when they use personal computers to do any sort of task, be it work- or leisure-related.

Logging in with full (“administrator”) privileges is certainly convenient because it allows you to do anything on the system (e.g. install new software, reconfigure any service, etc.) but it also means any malware accidentally engaged under that account now has the same unrestricted level of access to the system.

Habitually logging in to a computer system with a low-privilege account helps mitigate this risk, for any accidental execution of malware will be similarly limited in its power to do harm.

Another implementation of this policy is called application whitelisting, where only trusted software applications are allowed to be executed on any computer system. This stands in contrast to “blacklisting” which is the philosophy behind anti-virus software: maintaining a list of software applications known to be harmful (malware) and prohibiting the execution of those pre-identified applications.

Blacklisting (anti-virus) only protects against malware that has already been identified and whose signature has been delivered to that computer. Blacklisting cannot protect against “zero-day” malware known by no one except the attacker. In a whitelisting system, each computer is pre-loaded with a list of acceptable applications, and no other applications – benign or malicious – will be able to run on that machine.
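The difference between the two philosophies can be sketched in Python. Identifying each program by the SHA-256 hash of its file is an assumption made here for illustration; real whitelisting products may use signatures, paths, or publisher certificates instead. All function names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path) -> str:
    """Hash a file in chunks so large executables need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def may_run_under_whitelist(path: Path, approved_hashes: set[str]) -> bool:
    """Whitelisting: run only what is explicitly approved."""
    return sha256_of_file(path) in approved_hashes


def may_run_under_blacklist(path: Path, known_bad_hashes: set[str]) -> bool:
    """Blacklisting: run anything not already known to be harmful."""
    return sha256_of_file(path) not in known_bad_hashes
```

A file that appears on neither list, such as zero-day malware, is refused by the whitelist check but permitted by the blacklist check.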

Maintain Good Documentation

While this is important for effective maintenance in general, thorough and accurate documentation is especially important for digital security because it helps identify vulnerabilities.

Details to document include:

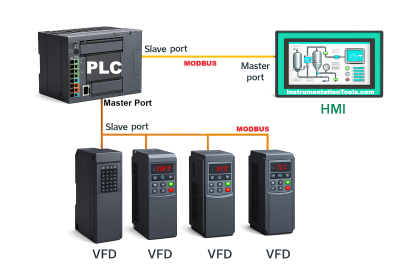

- Network diagrams

- Software version numbers

- Device addresses

Close Unnecessary Access Pathways

All access points to the critical system must be limited to those necessary for system function. This means all other potential access points in the critical system must be closed so as to minimize the total number of access points available to attackers.

Examples of access points which should be inventoried and minimized:

- Hardware communication ports (e.g. USB serial ports, Ethernet ports, wireless radio cards)

- Software TCP ports

- Shared network file storage (“network drives”)

- “Back-door” accounts used for system development
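For the software TCP ports in the list above, an inventory can be sketched with Python's standard `socket` module. The function names and the idea of comparing against a list of required ports are assumptions for illustration. Such a check should only be run against machines one is authorized to test, since some control devices tolerate unexpected connections poorly.

```python
import socket


def open_tcp_ports(host: str, ports, timeout: float = 0.5) -> list[int]:
    """Return the ports in `ports` that accept a TCP connection on `host`."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception.
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found


def unexpected_ports(found, required) -> list[int]:
    """Ports that are open but not on the list of those the system needs."""
    return sorted(set(found) - set(required))
```

Any port reported by `unexpected_ports` is a candidate for closing, or at least for an explanation in the system documentation.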

That last category deserves some further explanation. When engineers are working to develop a new system, otherwise ordinary and sensible authentication/authorization measures become a major nuisance.

The process of software development always requires repeated logins, shutdowns, and tests forcing the user to re-authenticate themselves and negotiate security controls. It is therefore understandable when engineers create simpler, easier access routes to the system under development, to expedite their work and minimize frustration.

Such “back-door” access points become a problem when those same engineers forget (or simply neglect) to remove them after the developed system is released for others to use. An interesting example of this very point was the so-called basisk vulnerability discovered in some Siemens S7 PLC products.

A security researcher named Dillon Beresford working for NSS Labs discovered a telnet service running on certain models of Siemens S7 PLCs with a user account named “basisk” (the password for this account being the same as the user name).

All one needed to do in order to gain privileged access to the PLC’s operating system was connect to the PLC using a telnet client and enter “basisk” for the user name and “basisk” for the password! Clearly, this was a back-door account used by Siemens engineers during development of that PLC product line, but it was not closed prior to releasing the PLC for general use.

Maintain Operating System Software

All operating system software manufacturers periodically release “patches” designed to improve the performance of their products. This includes patches for discovered security flaws. Therefore, it is essential for all computers belonging to a critical system to be regularly “patched” to ensure maximum resistance to attack.

This is a significant problem within industry because so much industrial control system software is built to run on consumer-grade operating systems such as Microsoft Windows. Popular operating systems are built with maximum convenience in mind, not maximum security or even maximum reliability. New features added to an operating system for the purpose of convenient access and/or new functionality often present new vulnerabilities.

Another facet to the consumer-grade operating system problem is that these operating systems have relatively short lifespans. Driven by consumer demand for more features, software manufacturers develop new operating systems and abandon older products at a much faster rate than industrial users upgrade their control systems.

Upgrading the operating systems on computers used for an industrial control system is no small feat, because it usually means disruption of that system’s function, not only in terms of the time required to install the new software but also (potentially) re-training required for employees.

Upgrading may even be impossible in cases where the new operating system no longer supports features necessary for that control system. This would not be a problem if operating system manufacturers provided the same long-term (multi-decade) support for their products as industrial hardware manufacturers typically do, but this is not the case for consumer-grade products such as Microsoft Windows.

Routinely Archive Critical Data

The data input into and generated by digital control systems is a valuable commodity, and must be treated as such. Unlike material commodities, data is easily replicated, and this fact provides some measure of protection against loss from a cyber-attack.

Routine “back-ups” of critical data, therefore, are an essential part of any cyber-security program. It should be noted that this includes not just operational data collected by the control system during operation, but also data such as:

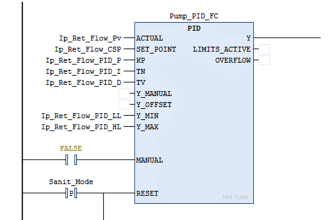

- PID tuning parameters

- Control algorithms (e.g. function block programs, configuration data, etc.)

- Network configuration parameters

- Software installation files

- Software license (authorization) files

- Software drivers

- Firmware files

- User authentication files

- All system documentation (e.g. network cable diagrams, loop diagrams)

This archived data should be stored in a medium immune to cyber-attacks, such as read-only optical disks. It would be foolish, for example, to store this sort of critical data only as files on the operating drives of computers susceptible to attack along with the rest of the control system.
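One way to confirm that an archive still matches what was originally stored is to record a hash of every file at back-up time and compare later. The following Python sketch assumes the archive is a directory tree. The manifest idea and function names are illustrative additions, not part of the procedure described above.

```python
import hashlib
from pathlib import Path


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to the SHA-256 of its contents."""
    return {
        path.relative_to(root).as_posix(): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def changed_files(root: Path, manifest: dict[str, str]) -> list[str]:
    """Names of files added, removed, or altered since the manifest was built."""
    current = build_manifest(root)
    names = set(manifest) | set(current)
    return sorted(name for name in names if manifest.get(name) != current.get(name))
```

The manifest itself would need to be kept somewhere an attacker cannot alter it, for instance on the same read-only medium as the archive.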

Create Response Plans

Just as no industrial facility would be safe without incident response plans to mitigate physical crises, no industrial facility using digital control systems is secure without response plans for cyber-attacks.

This includes such details as:

- A chain of command for leading the response

- Instructions on how to restore critical data and system functions

- Work-arounds for minimal operation while critical systems are still unavailable

Limit Mobile Device Access

Mobile digital devices such as cell phones and even portable storage media (e.g. USB “flash” drives) pose digital security risks because they may be exploited as an attack vector bypassing air gaps and firewalls. It should be noted that version 0.5 of Stuxnet was likely inserted into the Iranian control system in this manner, through an infected USB flash drive.

A robust digital security policy will limit or entirely prohibit personal electronic devices in areas where they might connect to the facility’s networks or equipment. Where mobile devices are essential for job functions, those devices should be owned by the organization and registered in such a way as to authenticate their use.

Computers should be configured to automatically reject non-registered devices such as removable flash-memory storage drives. Portable computers not owned and controlled by the organization should be completely off-limits from the process control system.

Above all, one should never underestimate the potential harm of allowing uncontrolled devices to connect to critical, trusted portions of an industrial control system. The degree to which any portion of a digital system may be considered “trusted” is a function of every component of that system. Allowing connection to untrusted devices violates the confidence of that system.

Secure All Toolkits

A special security consideration for industrial control systems is the existence of software designed to create and edit controller algorithms and configurations. The type of software used to write and edit Ladder Diagram (LD) code inside of programmable logic controllers (PLCs) is a good example of this, such as the Step7 software used to program Siemens PLCs in Iran’s Natanz uranium enrichment facility.

Instrumentation professionals use such software on a regular basis to do their work, and as such it is an essential tool of the trade. However, this very same software is a weapon in the hands of an attacker, or when hijacked by malicious code.

A common practice in industry is to leave computers equipped with such “toolkit” software connected to the control network for convenience. This is a poor policy, and one that is easily remedied by simply disconnecting the programming computer from the control network immediately after downloading the edited control code. An even more secure policy is to never connect such “toolkit” computers to a network at all, but only to controllers directly, so that the toolkit software cannot be hijacked.

Another layer of defense is to utilize robust password protection on the programmable control devices when available, rather than leaving password fields blank which then permits any user of the toolkit software full access to the controller’s programming.

Close Abandoned Accounts

Given the fact that disgruntled technical employees constitute a significant security threat to organizations, it stands to reason that the user accounts of terminated employees should be closed as quickly as possible.

Not only do terminated employees possess authentication knowledge in the form of user names and passwords, but they may also possess extensive knowledge of system design and vulnerabilities.

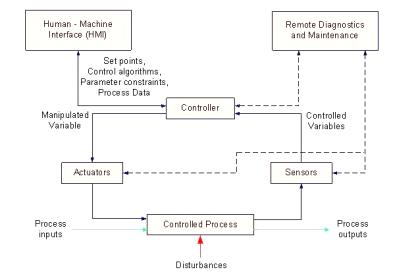

Design-based Strategies

A design-based security strategy is one rooted in technical details of system architecture and functionality. Some of these strategies are quite simple (e.g. air gaps) while others are quite complex (e.g. encryption). In either case, they are strategies ideally addressed at the inception of a new system, and at every point of system alteration or expansion.

Advanced Authentication

The authentication security provided by passwords, which is the most basic and popular form of authentication at the time of this writing, may be greatly enhanced if the system is designed to not just reject incorrect passwords, but to actively inconvenience the user for entering wrong passwords.

Password timeout systems introduce a mandatory waiting period for the user if they enter an incorrect password, typically after a couple of attempts so as to allow for innocent entry errors. Password lockout systems completely lock a user out of their digital account if they enter multiple incorrect passwords. The user’s account must then be reset by another user on that system possessing high-level privileges.

The concept behind both password timeouts and password lockouts is to greatly increase the amount of time required for any dictionary-style or brute-force password attack to be successful, and therefore deter these attacks.

Unfortunately, timeouts and lockouts also introduce a vulnerability of their own: the denial-of-service attack. Someone wishing to deny access to a particular system user need only attempt to sign in as that user, using incorrect passwords. The timeout or lockout system will then delay (or outright deny) access to the legitimate user.
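The behavior of timeouts and lockouts, including their denial-of-service side effect, can be sketched as a small Python class. The class name, the thresholds, and the injectable `clock` parameter are all assumptions chosen for illustration. Actual products differ in how they count and reset failures.

```python
import time
from typing import Callable


class LoginThrottle:
    """Tracks failed logins per user and applies a timeout, then a lockout."""

    def __init__(self, free_attempts: int = 3, delay_seconds: float = 30.0,
                 lockout_after: int = 10,
                 clock: Callable[[], float] = time.monotonic):
        self.free_attempts = free_attempts    # failures before the timeout begins
        self.delay_seconds = delay_seconds    # mandatory wait after each later failure
        self.lockout_after = lockout_after    # failures that lock the account
        self._clock = clock
        self._failures: dict[str, int] = {}
        self._blocked_until: dict[str, float] = {}

    def is_locked_out(self, user: str) -> bool:
        return self._failures.get(user, 0) >= self.lockout_after

    def seconds_to_wait(self, user: str) -> float:
        return max(0.0, self._blocked_until.get(user, 0.0) - self._clock())

    def may_attempt(self, user: str) -> bool:
        return not self.is_locked_out(user) and self.seconds_to_wait(user) == 0.0

    def record_failure(self, user: str) -> None:
        count = self._failures.get(user, 0) + 1
        self._failures[user] = count
        if count >= self.free_attempts:
            self._blocked_until[user] = self._clock() + self.delay_seconds

    def record_success(self, user: str) -> None:
        self._failures.pop(user, None)
        self._blocked_until.pop(user, None)

    def admin_reset(self, user: str) -> None:
        """Stands in for a high-privilege user clearing a lockout."""
        self.record_success(user)
```

Note that `record_failure` takes only a user name. Nothing stops an attacker from calling it repeatedly for someone else's account, which is exactly the denial-of-service weakness described above.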

Authentication based on the user’s knowledge (e.g. passwords) is but one form of identification, though. Other forms of authentication exist which are based on the possession of physical items called tokens, as well as identification based on unique features of the user’s body (e.g. retinal patterns, fingerprints) called biometric authentication.

Token-based authentication requires all users to carry tokens on their person. This form of authentication remains secure only so long as the token is not stolen or copied by a malicious party.

Biometric authentication enjoys the advantage of being extremely difficult to replicate and nearly impossible to lose. The hardware required to scan fingerprints is relatively simple and inexpensive. Retinal scanners are more complex, but not beyond the reach of organizations possessing expensive digital assets. Presumably, there will even be DNA-based authentication technology available in the future.

Air Gaps

An air gap is precisely what the name implies: a physical separation between the critical system and any network, preventing communication. Although it seems so simple that it ought to be obvious, an important design question to ask is whether or not the system in question really needs to have connectivity at all.

Certainly, the more networked the system is, the easier it will be to access useful information and perform useful operational functions. However, connectivity is also a liability: that same convenience makes it easier for attackers to gain access.

While it may seem as though air gaps are the ultimate solution to digital security, this is not entirely true. A control system that never connects to a network is still vulnerable to cyber-attack through detachable programming and data-storage devices.

For example, a PLC without a permanent network connection may become compromised by way of an infected portable computer used to edit the PLC’s code. A DCS completely isolated from the facility’s local area network (LAN) may become compromised if someone plugs in an infected data storage device such as a USB “flash” memory module.

In order for air gaps to be completely effective, they must be permanent and include portable devices as well as network connections. This is where effective security policy comes into play, ensuring portable devices are not allowed into areas where they might connect (intentionally or otherwise) to critical systems.

Effective air-gapping of critical networks also necessitates physical security of the network media: ensuring attackers cannot gain access to the network cables themselves, so as to “tap” into those cables and thereby gain access. This requires careful planning of cable routes and possibly extra infrastructure (e.g. separate cable trays, conduits, and access controlled equipment rooms) to implement.

Wireless (radio) data networks pose a special problem for the “air gap” strategy, because the very purpose of radio communication is to bridge physical air gaps. A partial measure applicable to some wireless systems is to use directional antennas to link separated points together, as opposed to omnidirectional antennas which transmit and receive radio energy equally in all directions.

This complicates the task of “breaking in” to the data communication channel, although it is not 100 percent effective since no directional antenna has a perfectly focused radiation pattern, nor do directional antennas preclude the possibility of an attacker intercepting communications directly between the two antennas. Like all security measures, the purpose of using directional antennas is to make an attack less probable.

Network Segregation

This simply means to divide digital networks into separate entities (or at least into layers) in order to reduce the exposure of digital control systems to any sources of harm. Air gaps constitute an elementary form of network segregation, but are not practical when at least some data must be communicated between networks.

At the opposite end of the network segregation spectrum is a scenario where all digital devices, control systems and office computers alike, connect to the facility’s common Local Area Network (LAN). This is almost always a bad policy, as it invites a host of problems not limited to cyber-attacks but extending well beyond that to innocent mistakes and routine faults which may compromise system integrity. At the very least, control systems deserve their own dedicated network(s) on which to communicate, free of traffic from general information technology (IT) office systems.

In facilities where control system data absolutely must be shared on the general LAN, a firewall should be used to connect those two networks. Firewalls are either software or hardware entities designed to filter data passed through based on pre-set rules. In essence, each network on either side of a firewall is a “zone” of communication, while the firewall is a “conduit” between zones allowing only certain types of messages through.

A rudimentary firewall might be configured to “blacklist” any data packets carrying hyper-text transfer protocol (HTTP) messages, as a way to prevent web based access to the system. Alternatively, a firewall might be configured to “whitelist” only data packets carrying Modbus messages for a control system and block everything else.
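The two rule styles can be contrasted in a short Python sketch. Here a protocol is identified only by its conventional TCP destination port (80 for HTTP, 502 for Modbus TCP), which is a simplification. The `Packet` type and both function names are hypothetical, and real firewalls examine far more than one field.

```python
from dataclasses import dataclass

HTTP_PORT = 80          # conventional port for HTTP
MODBUS_TCP_PORT = 502   # registered port for Modbus TCP


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    dst_port: int


def blacklist_firewall(packet: Packet,
                       blocked_ports: frozenset = frozenset({HTTP_PORT})) -> bool:
    """Pass everything except traffic to the listed ports."""
    return packet.dst_port not in blocked_ports


def whitelist_firewall(packet: Packet,
                       allowed_ports: frozenset = frozenset({MODBUS_TCP_PORT})) -> bool:
    """Pass only traffic to the listed ports and block everything else."""
    return packet.dst_port in allowed_ports
```

A telnet packet (port 23) passes the blacklist rule because nobody thought to block it, but fails the whitelist rule because nobody explicitly allowed it.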

Some specialized firewalls are manufactured specifically for industrial control systems. One such firewall is manufactured by Tofino, and has the capability to screen data packets based on rules specific to industrial control system platforms such as popular PLC models. Industrial firewalls differ from general-purpose data firewalls in their ability to recognize control-specific data.

In systems where different communication zones must have different levels of access to the outside world, multiple firewalls may be set up in such a way as to create a so-called demilitarized zone (DMZ). A DMZ is a network existing between a pair of firewalls, one firewall filtering data to and from a protected network, and the other firewall filtering data to and from an unprotected (or less protected) network.

Any devices connected to the DMZ will have access to either network, through different firewall rule sets. Any data exchanged between the protected and unprotected networks, though, must pass through both firewalls.

Encryption

Encryption refers to the intentional scrambling of data by means of a designated code called a key, a similar (or in some cases identical) key being used to un-scramble (decrypt) that data on the receiving end.

The purpose of encryption, of course, is to foil passive attacks by making the data unintelligible to anyone but the intended recipient, and also to foil active attacks by making it impossible for an attacker’s transmitted message to be successfully received.

A popular form of encrypted communication used for general networking is a Virtual Private Network or VPN. This is where two computer systems use VPN software (or two VPN hardware devices) to encrypt messages sent to each other over an unsecure medium.

Since every single packet of data exchanged between the two computers is encrypted, the communication will be unintelligible to anyone else who might be “listening” on that unsecure network. In essence, VPNs create a secure “tunnel” for data to travel between points on an otherwise unprotected network.

Encryption may also be applied to dedicated, non-broadcast networks such as telephone channels and serial data communication lines. Special cryptographic modems and serial data translators are manufactured specifically for this purpose, and may be applied to legacy SCADA and control networks based on telephony or serial communication cables.

Important files stored on computer drives may also be encrypted, such that only users possessing the proper key(s) may decrypt and use the files.

It should be noted that encryption does not necessarily protect against so-called replay attacks, where the attacker records a communicated message and later re-transmits that same message to the network. For example, if a control system uses an encrypted message to command a remotely located valve to shut, an attacker might simply re-play that same message at any time in the future to force the valve to shut without having to decrypt the message.
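The replay problem can be demonstrated with a short Python sketch. To stay within the standard library, the message here is protected by an HMAC tag rather than by encryption. The weakness is the same in either case, because the attacker re-sends the captured bytes unchanged. The message counter shown as a countermeasure is one common approach and is an addition for illustration, as are all names in the code.

```python
import hashlib
import hmac
from typing import Optional

TAG_LENGTH = 32      # bytes in an HMAC-SHA256 tag
COUNTER_LENGTH = 8   # bytes used for the message counter


def seal(key: bytes, counter: int, command: bytes) -> bytes:
    """Prefix the command with a counter and append an HMAC-SHA256 tag."""
    body = counter.to_bytes(COUNTER_LENGTH, "big") + command
    return body + hmac.new(key, body, hashlib.sha256).digest()


class Receiver:
    def __init__(self, key: bytes, check_counter: bool):
        self.key = key
        self.check_counter = check_counter
        self.last_counter = -1

    def accept(self, message: bytes) -> Optional[bytes]:
        """Return the command if the message is accepted, otherwise None."""
        if len(message) < COUNTER_LENGTH + TAG_LENGTH:
            return None
        body, tag = message[:-TAG_LENGTH], message[-TAG_LENGTH:]
        expected = hmac.new(self.key, body, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            return None  # forged or corrupted message
        counter = int.from_bytes(body[:COUNTER_LENGTH], "big")
        if self.check_counter:
            if counter <= self.last_counter:
                return None  # counter already seen: treat as a replay
            self.last_counter = counter
        return body[COUNTER_LENGTH:]
```

A receiver built with `check_counter=False` accepts the same sealed "shut valve" message every time it arrives, while one built with `check_counter=True` accepts it once and rejects the repeat.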

An interesting form of encryption applicable to certain wireless (radio) data networks is spread spectrum communication. This is where the data is not all communicated over a single radio carrier frequency, but rather is divided or “spread” among a range of frequencies.

Various techniques exist for spreading digital data across a spectrum of radio frequencies, but they all comprise a form of data encryption because the spreading of that data is orchestrated by means of a cryptographic key. Perhaps the simplest spread-spectrum method to understand is frequency-hopping or channel hopping, where the transmitters and receivers both switch frequencies on a keyed schedule.

Any receiver uninformed by the same key will not “know” which channels will be used, or in what order, and therefore will be unable to intercept anything but isolated pieces of the communicated data. Spread-spectrum technology was invented during the Second World War as a means for Allied forces to encrypt their radio transmissions such that Axis forces could not interpret them.
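A keyed hopping schedule can be sketched in Python by deriving each time slot's channel from a shared key. Using HMAC-SHA256 for the derivation and a default of 79 channels are assumptions made purely for illustration. This is not the hop-selection procedure of any particular radio standard.

```python
import hashlib
import hmac


def hop_channel(key: bytes, slot: int, channel_count: int) -> int:
    """Channel index for one time slot, derived from the shared key."""
    digest = hmac.new(key, slot.to_bytes(8, "big"), hashlib.sha256).digest()
    # Reduce the first four bytes of the digest to a channel index.
    return int.from_bytes(digest[:4], "big") % channel_count


def hop_schedule(key: bytes, slots: int, channel_count: int = 79) -> list[int]:
    """Channel sequence for time slots 0 through slots - 1."""
    return [hop_channel(key, slot, channel_count) for slot in range(slots)]
```

A transmitter and receiver holding the same key compute identical schedules and so stay on the same channel in every slot, while a listener without the key cannot predict the sequence.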

Spread-spectrum capability is built into several wireless data communication standards, including Bluetooth and WirelessHART.

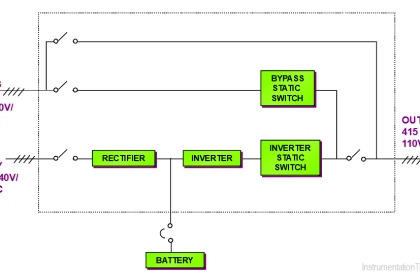

Read-only System Access

One way to thwart so-called “active” attacks (where the attacker inserts or modifies data in a digital system to achieve malicious ends) is to engineer the system in such a way that all communicated data is read-only and therefore cannot be written or edited by anyone.

This, of course, by itself will do nothing to guard against “passive” (read-only) attacks such as eavesdropping, but passive attacks are definitely the lesser of the two evils with regard to industrial control systems.

In systems where the digital data is communicated serially using protocols such as EIA/TIA-232, read-only access may be ensured by simply disconnecting one of the wires in the EIA/TIA-232 cable. By disconnecting the wire leading to the “receive data” pin of the critical system’s EIA/TIA-232 serial port, that system cannot receive external data but may only transmit data. The same is true for EIA/TIA-485 serial communications where “transmit” and “receive” connection pairs are separate.

Certain serial communication schemes are inherently simplex (i.e. one-way communication) such as EIA/TIA-422. If this is an option supported by the digital system in question, the use of that option will be an easy way to ensure remote read-only access.

For communication standards such as Ethernet which are inherently duplex (bi-directional), devices called data diodes may be installed to ensure read-only access. The term “data diode” invokes the functionality of a semiconductor rectifying diode, which allows the passage of electric current in one direction only. Instead of blocking reverse current flow, however, a “data diode” blocks reverse information flow.

The principle of read-only protection applies to computing systems as well as communication networks. Some digital systems do not strictly require on-board data collection or modification of operating parameters, and in such cases it is possible to replace read/write magnetic data drives with read-only (e.g. optical disk) drives in order to create a system that cannot be compromised.

Admittedly, applications of this strategy are limited, as there are few control systems which never store operational data nor require any editing of parameters. However, this strategy should be considered where it applies.

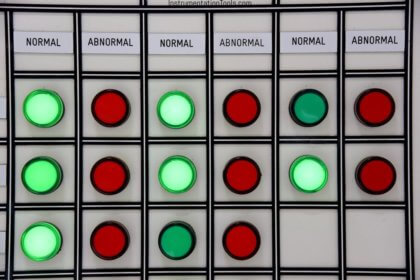

Many digital devices offer write-protection features in the form of a physical switch or key-lock preventing data editing. Just as some types of removable data drives have a “write-protect” tab or switch located on them, some “smart” field instruments also have write-protect switches inside their enclosures which may be toggled only by personnel with direct physical access to the device. Programmable Logic Controllers (PLCs) often have a front-panel write-protect switch allowing protection of the running program.

Not only do write-protect switches guard against malicious attacks, but they also help prevent innocent mistakes from causing major problems in control systems.

Consider the example of a PLC network where each PLC connected to a common data network has its own hardware write-protect switch. If a technician or engineer desires to edit the program in one of these PLCs from their remotely-located personal computer, that person must first go to the location of that PLC and disable its write protection.

While this may be seen as an inconvenience, it ensures that the PLC programmer will not mistakenly access the wrong PLC from their office-located personal computer, which is especially easy to do if the PLCs are similarly labeled on the network.

Making regular use of such features is a policy measure, but ensuring the exclusive use of equipment with this feature is a system design measure.

Control Platform Diversity

In control and safety systems utilizing redundant controller platforms, an additional measure of security is to use different models of controller in the redundant array.

For example, a redundant control or safety system using two-out-of-three voting (2oo3) between three controllers might use controllers manufactured by three different vendors, each of those controllers running different operating systems and programmed using different editing software. This mitigates device-specific attacks, since no two controllers in the array should have the exact same vulnerabilities.
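The voting logic itself is simple enough to state in a few lines of Python. This sketch assumes each controller reports a single Boolean trip demand. The function name is illustrative.

```python
def vote_2oo3(a: bool, b: bool, c: bool) -> bool:
    """True when at least two of the three controllers demand the action."""
    return (a and b) or (a and c) or (b and c)
```

With this arrangement a single compromised controller can neither force the action on its own nor block it when the other two agree, which is why diversity among the three platforms matters.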

A less-robust approach to process control security through diverse platforms is simply the use of effective Safety Instrumented Systems (SIS) applied to critical processes, which always employ controls different from the base-layer control system.

An SIS is designed to bring the process to a safe (shut down) condition in the event that the regular control system is unable to maintain normal operating conditions. In order to avoid common-cause failures, the SIS must be implemented on a control platform independent from the regular control system.

The SIS might even employ analog control technology (and/or discrete relay-based control technology) in order to give it complete immunity from digital attacks.

In either case, improving security through the use of multiple, diverse control systems is another example of the defense-in-depth philosophy in action: building the system in such a way that no essential function depends on a single layer or single element, but rather multiple layers exist to ensure that essential function.