An instrument's accuracy is limited by its precision. An instrument's precision is determined by making minute variations in the measured process variable (e.g., pressure, temperature) and then monitoring the instrument's output for a response.

The smallest change an instrument can detect and then reflect at its output is its precision. This precision rating can be expressed as a percentage of span or in engineering units.
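Converting a precision rating between the two forms is a simple ratio against the span. The minimal Python sketch below uses the hypothetical 0-12 ft (144 in) level transmitter discussed later in this article; the function names are illustrative only.

```python
def precision_percent_of_span(precision_eu: float, span_eu: float) -> float:
    """Convert a precision given in engineering units to percent of span."""
    return precision_eu / span_eu * 100.0


def precision_engineering_units(precision_pct: float, span_eu: float) -> float:
    """Convert a precision given in percent of span back to engineering units."""
    return precision_pct / 100.0 * span_eu


# Hypothetical example: 0.1 in precision on a 0-12 ft (144 in) span
pct = precision_percent_of_span(0.1, 144.0)
print(f"{pct:.4f} % of span")  # 0.0694 % of span
print(f"{precision_engineering_units(pct, 144.0):.1f} in")  # 0.1 in
```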

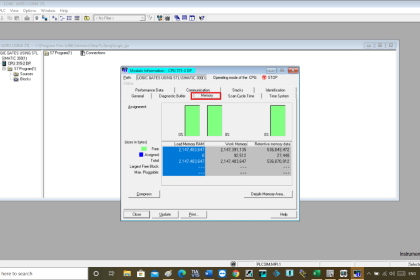

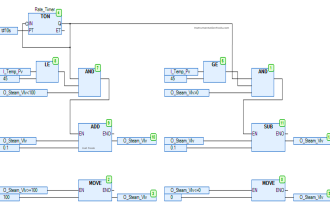

PLC Analog Input Card Resolution Effects on Accuracy

For example, a hypothetical ultrasonic tank level transmitter might have a detection range of 0-12 ft with its output scaled 4-20 mA, precise to ±0.1 inch.

Precision can be related to the instrument's resolution. Rather than moving smoothly as the tank level changes, the output steps to a new milliamp value each time the level changes by 0.1 inch.

The transmitter's resolution is

12 ft x 12 in/ft x 10 steps/in = 1440 steps across the range.

Therefore, the 4-20 mA signal will change in increments of 16 mA / 1440 steps = 0.01111 mA/step.
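The step calculation above, and the stepped output it implies, can be modeled in a few lines of Python. This is a sketch under stated assumptions: the hypothetical transmitter's output snaps to the nearest 0.1 in step (a real device may truncate or behave differently), and all names are illustrative.

```python
SPAN_IN = 12 * 12  # 0-12 ft range expressed in inches
STEP_IN = 0.1      # assumed transmitter precision, inches
MA_LOW, MA_HIGH = 4.0, 20.0

steps = round(SPAN_IN / STEP_IN)          # number of steps across the range
ma_per_step = (MA_HIGH - MA_LOW) / steps  # milliamp change per step


def transmitter_output_ma(level_in: float) -> float:
    """Model a stepped 4-20 mA output (assumption: rounds to nearest 0.1 in step)."""
    level_in = min(max(level_in, 0.0), SPAN_IN)  # clamp to the transmitter range
    step_index = round(level_in / STEP_IN)
    return MA_LOW + step_index * ma_per_step


print(steps)                          # 1440
print(f"{ma_per_step:.5f} mA/step")   # 0.01111 mA/step
```

Note that a level change smaller than one step (for example, 72.00 in to 72.04 in) produces no change at all in the modeled output.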

In the digital world, resolution is expressed in bits. A rating such as "12-bit resolution" tells us the smallest change in signal magnitude that the computer or device can interpret: a 12-bit value can take 2^12 = 4096 distinct levels.
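The relationship between bit count and the smallest distinguishable step can be tabulated directly. This sketch follows the article's convention of dividing the span by 2^n (some texts divide by 2^n - 1, the number of intervals between codes); the function name is illustrative.

```python
def levels(bits: int) -> int:
    """Number of distinct codes an n-bit converter can produce."""
    return 2 ** bits


for bits in (8, 12, 16):
    step_pct = 100 / levels(bits)  # smallest step as a percentage of span
    print(f"{bits}-bit: {levels(bits)} codes, step = {step_pct:.4f} % of span")
```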

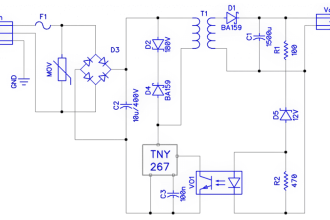

This resolution sets a margin of error. Because each device in a system has its own resolution (and therefore its own margin of error), the overall accuracy of the system decreases every time the signal is converted or retransmitted.
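One way to see how stage errors accumulate is to combine them numerically. The article does not name a method; the sketch below swaps in two common estimates, a worst-case linear sum and a root-sum-square (RSS) that assumes independent random errors. The stage values (a 0.1 in transmitter step and the 0.0352 in PLC step worked out later in this article) are used only for illustration.

```python
import math


def worst_case(errors):
    """Assume every stage errs in the same direction at the same time."""
    return sum(abs(e) for e in errors)


def root_sum_square(errors):
    """Assume independent, random stage errors (a common statistical estimate)."""
    return math.sqrt(sum(e * e for e in errors))


# Hypothetical chain: transmitter step, then PLC analog input step (inches)
stages = [0.1, 0.0352]
print(f"worst case: {worst_case(stages):.4f} in")  # worst case: 0.1352 in
print(f"RSS:        {root_sum_square(stages):.4f} in")
```

Either way, adding another conversion stage can only increase the combined error, which is the point the paragraph above makes.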

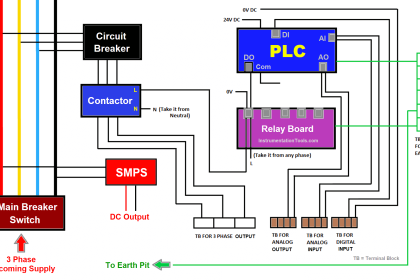

In most cases, the best move is to digitize the signal at the sensor and then transmit the value as a digital data stream rather than as an analog electrical signal, thus eliminating several layers of conversion.

Many PLC analog input cards have 12-bit resolution. This means the analog signal is converted to a 12-bit binary integer.

Once in integer format, the value can be displayed as a decimal integer, in hexadecimal, or in any other format.
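The integer itself does not change; only its display form does. A minimal sketch, assuming a hypothetical 12-bit raw count (the function name is illustrative):

```python
def display_forms(count: int, bits: int = 12) -> dict:
    """Return decimal, hexadecimal and binary renderings of a raw converter count."""
    if not 0 <= count < 2 ** bits:
        raise ValueError(f"count must fit in {bits} bits")
    hex_digits = (bits + 3) // 4  # enough hex digits to cover all the bits
    return {
        "decimal": str(count),
        "hex": f"0x{count:0{hex_digits}X}",
        "binary": f"{count:0{bits}b}",
    }


print(display_forms(2048))  # mid-scale count of a 12-bit input
```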

A 4-20 mA signal has a span of 16 mA (20 - 4 = 16).

This 16 mA span might represent the 0-100% output of an ultrasonic transmitter or other device.
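Mapping a loop current onto percent of output, and then onto engineering units, is a linear scaling over the 16 mA span. The sketch below assumes the 0-12 ft transmitter example; function names are illustrative.

```python
MA_LOW, MA_HIGH = 4.0, 20.0
SPAN_MA = MA_HIGH - MA_LOW  # 16 mA


def ma_to_percent(ma: float) -> float:
    """Percent of output represented by a 4-20 mA loop current."""
    return (ma - MA_LOW) / SPAN_MA * 100.0


def percent_to_eu(pct: float, lrv: float, urv: float) -> float:
    """Engineering-unit value for a percent of output over the range lrv..urv."""
    return lrv + pct / 100.0 * (urv - lrv)


print(ma_to_percent(12.0))                            # 50.0
print(percent_to_eu(ma_to_percent(12.0), 0.0, 12.0))  # 6.0 (ft)
```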

For an ultrasonic meter with a calibrated range of 12 ft (144 inches), how much error will the PLC introduce to the system?

12-bit resolution: 4096 divisions

Resolution error: 144 inches / 4096 = 0.0352 inches
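The same calculation in Python, following the article's convention of dividing the calibrated range by 2^12 divisions. The comparison with the 0.1 in transmitter step from the earlier example is included only for context.

```python
BITS = 12
COUNTS = 2 ** BITS  # 4096 divisions
RANGE_IN = 12 * 12  # 12 ft calibrated range, in inches
TRANSMITTER_STEP_IN = 0.1  # hypothetical transmitter precision from the earlier example

resolution_in = RANGE_IN / COUNTS
print(f"{resolution_in:.4f} in per count")  # 0.0352 in per count
print("PLC step finer than transmitter step:", resolution_in < TRANSMITTER_STEP_IN)  # True
```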

Instrument Range versus Scale

There is a difference between an instrument's design range and its scale.

When an instrument is received, it comes with an inherent range. This range value defines the maximum input span for which the device can provide a linear output.

For example, an ultrasonic level transmitter might have a range of 12 ft.

If we place that transmitter in a 6-ft tank and do not recalibrate it, we will lose resolution.

Its output will only change 50% for a 100% change in tank level.

To rectify this, the unit needs to be recalibrated to provide a 0-100% change in output for a 0-50% change in its input, provided the device is scalable to that degree.
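The lost resolution can be quantified by counting how many PLC input counts a full empty-to-full swing actually uses. This sketch assumes the 12-bit input from the previous section (span divided by 4096) and assumes the transmitter's zero is aligned with the bottom of the 6-ft tank; names are illustrative.

```python
COUNTS = 2 ** 12  # 12-bit analog input, span divided by 4096 as in the article


def output_percent(level_ft: float, lrv_ft: float, urv_ft: float) -> float:
    """Transmitter output, in % of its 4-20 mA span, for a given level."""
    return (level_ft - lrv_ft) / (urv_ft - lrv_ft) * 100.0


def counts_used(tank_ft: float, lrv_ft: float, urv_ft: float) -> int:
    """PLC counts exercised when the tank goes from empty to full."""
    return round(COUNTS * output_percent(tank_ft, lrv_ft, urv_ft) / 100.0)


TANK_FT = 6.0
for label, urv in (("as received, 0-12 ft", 12.0), ("recalibrated, 0-6 ft", 6.0)):
    used = counts_used(TANK_FT, 0.0, urv)
    inches_per_count = TANK_FT * 12 / used
    print(f"{label}: {output_percent(TANK_FT, 0.0, urv):.0f}% output at full tank, "
          f"{used} counts, {inches_per_count:.4f} in/count")
```

Recalibrating to 0-6 ft doubles the counts available across the tank, halving the level change represented by each count.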

Sometimes a device cannot be scaled down to a span smaller than, say, 10% of its range (a 10:1 turndown). This limit is called rangeability.
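A rangeability check reduces to comparing the requested span against the smallest span the device allows. The 10% minimum below is the article's illustrative figure, used here as a hypothetical default; function names are also illustrative.

```python
def turndown(range_span: float, calibrated_span: float) -> float:
    """Ratio of full range to the calibrated span (e.g. 12 ft / 6 ft = 2:1)."""
    return range_span / calibrated_span


def can_rescale(range_span: float, calibrated_span: float, min_fraction: float = 0.10) -> bool:
    """True if the requested span fits inside the device's rangeability.

    min_fraction is a hypothetical limit: the smallest allowed span as a
    fraction of the full range (0.10 corresponds to a 10:1 turndown).
    """
    return min_fraction * range_span <= calibrated_span <= range_span


print(turndown(12.0, 6.0))     # 2.0
print(can_rescale(12.0, 6.0))  # True: 6 ft is within a 10:1 turndown of 12 ft
print(can_rescale(12.0, 1.0))  # False: 1 ft is below 10% of 12 ft
```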

Instrument Calibration

An instrument's scale and its calibrated range are synonymous. Once a scale is decided on, the instrument must be calibrated.

If its range is 0-550 psig and its scale is 10-350 psig, its calibrated span is 350 - 10 = 340 psi.
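The span calculation, together with a sanity check that the chosen scale lies inside the instrument's range, can be sketched as follows (function name illustrative; values from the example above).

```python
def calibrated_span(lrv: float, urv: float, range_low: float, range_high: float) -> float:
    """Span of the chosen scale, rejecting scales that fall outside the device range."""
    if not (range_low <= lrv < urv <= range_high):
        raise ValueError("scale must lie inside the instrument's range")
    return urv - lrv


print(calibrated_span(10.0, 350.0, 0.0, 550.0))  # 340.0
```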

The calibration end points are the zero point (10 psig in this example) and the span point (350 psig).

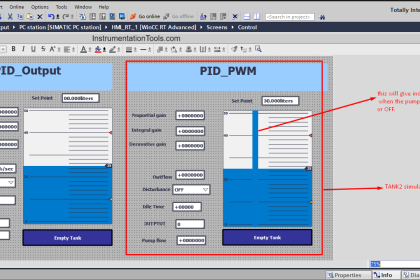
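During calibration, the applied pressure at the zero and span points should produce 4 mA and 20 mA respectively. The sketch below computes the expected output for the 10-350 psig example; the intermediate 25/50/75% points are an assumption (a common practice for checking linearity), not something the article specifies.

```python
def expected_ma(pressure: float, lrv: float, urv: float,
                ma_low: float = 4.0, ma_high: float = 20.0) -> float:
    """Ideal transmitter output for an applied pressure on a linear 4-20 mA scale."""
    return ma_low + (pressure - lrv) / (urv - lrv) * (ma_high - ma_low)


LRV, URV = 10.0, 350.0  # zero and span points, psig
for pct in (0, 25, 50, 75, 100):
    p = LRV + pct / 100 * (URV - LRV)
    print(f"{pct:3d}%  {p:6.1f} psig -> {expected_ma(p, LRV, URV):5.2f} mA")
```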

The point at which the process is expected to operate is called the set point. It is not generally part of instrument calibration, other than to confirm that the anticipated set point lies near the center of the calibrated span.
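Confirming that the set point sits near mid-span is a one-line calculation. The ±25% band around the 50% mark used here is a hypothetical tolerance chosen for illustration, as are the example set points.

```python
def setpoint_percent(sp: float, lrv: float, urv: float) -> float:
    """Position of the set point as a percentage of the calibrated span."""
    return (sp - lrv) / (urv - lrv) * 100.0


def near_center(sp: float, lrv: float, urv: float, band: float = 25.0) -> bool:
    """band is a hypothetical tolerance, in % of span, around the 50% mark."""
    return abs(setpoint_percent(sp, lrv, urv) - 50.0) <= band


print(f"{setpoint_percent(200.0, 10.0, 350.0):.1f} %")  # 55.9 %
print(near_center(200.0, 10.0, 350.0))  # True
print(near_center(320.0, 10.0, 350.0))  # False
```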

