The most common technologies for industrial temperature measurement are electrical in nature: RTDs and thermocouples. As such, the standards used to calibrate such devices are the same standards used to calibrate electrical instruments such as digital multimeters (DMMs). For RTDs, this means a precision resistance standard such as a decade box used to precisely set known quantities of electrical resistance. For thermocouples, this means a precision potentiometer used to generate precise quantities of low DC voltage (in the millivolt range, with microvolt resolution).
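As a rough illustration of how a decade box setting might be chosen, the sketch below computes the resistance a platinum RTD would present at a given temperature. It assumes a Pt100 element following the IEC 60751 Callendar-Van Dusen coefficients; the text above does not specify an RTD type, and the function name `rtd_resistance` is purely illustrative.

```python
# Assumption: standard platinum RTD per IEC 60751 (alpha = 0.00385).
# The source text does not name a specific RTD type.
CVD_A = 3.9083e-3    # 1/degC
CVD_B = -5.775e-7    # 1/degC^2
CVD_C = -4.183e-12   # 1/degC^4, applies only below 0 degC


def rtd_resistance(temp_c: float, r0: float = 100.0) -> float:
    """Resistance in ohms to dial in on a decade box to simulate the RTD."""
    base = 1 + CVD_A * temp_c + CVD_B * temp_c ** 2
    if temp_c < 0:
        # Extra term of the Callendar-Van Dusen equation for sub-zero temperatures
        base += CVD_C * (temp_c - 100) * temp_c ** 3
    return r0 * base


for t in (0, 50, 100):
    print(f"{t:>4} degC -> {rtd_resistance(t):.2f} ohm")
```

Under these assumptions, simulating 100 degrees Celsius calls for about 138.5 ohms on the decade box.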

Photographs of antique potentiometers used to calibrate thermocouple-sensing temperature instruments appear here: (Old Models)

Modern, electronic calibrators are also available now for RTD and thermocouple instrument calibration, capable of sourcing accurate quantities of electrical resistance and DC millivoltage for the simulation of RTD and thermocouple elements, respectively. A photograph of a Fluke model 525A laboratory standard is shown here:

Both the antique potentiometers and modern laboratory calibrators such as the Fluke 525A are self-contained sources useful for simulating the electrical outputs of temperature sensors. If you closely observe the potentiometer photos, you can see numbers engraved around the circumference of the dials, showing the user how much voltage the device output at any given setting.

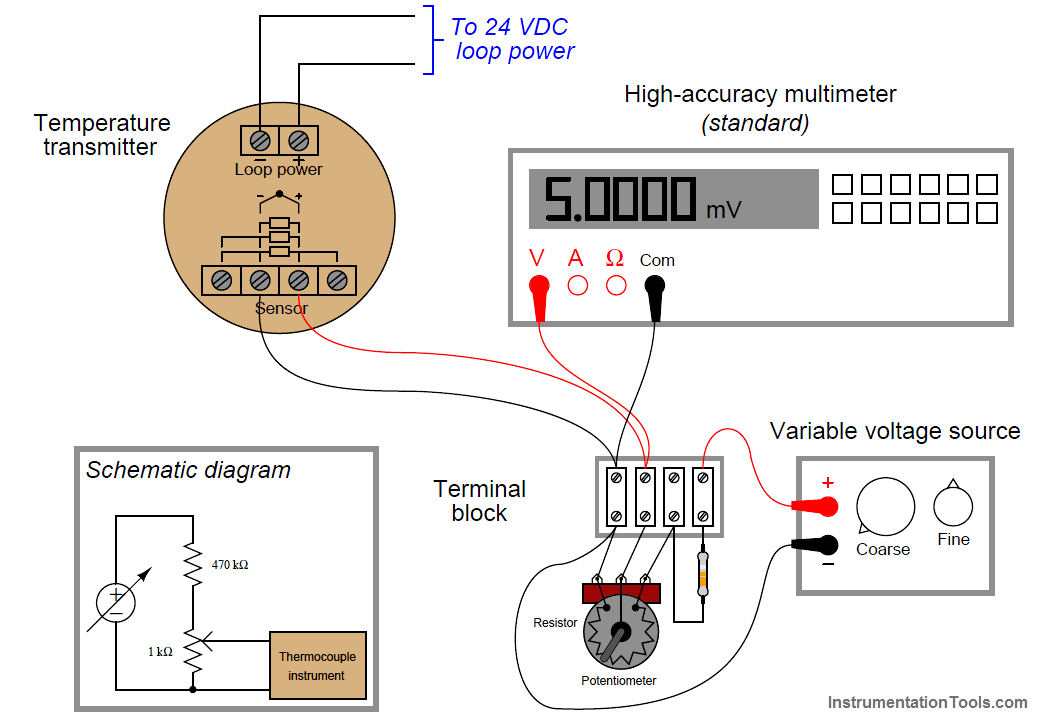

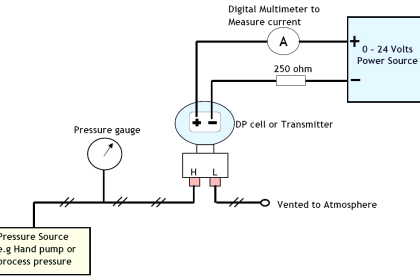

Given an accurate enough voltmeter, it is possible to construct your own calibration potentiometer for simulating the millivoltage output of a thermocouple. A simple voltage divider set up to reduce the DC voltage of an ordinary variable-voltage power supply will suffice, so long as it provides fine enough adjustment:

Unlike the potentiometers of old, which provided direct read-out of millivoltage at the potentiometer dial(s), we rely here on the accuracy of the precision multimeter to tell us when we have reached the required millivoltage with our power supply and voltage divider circuit. This means the high-accuracy multimeter functions as the calibration standard in this set-up, permitting the use of non-precision components in the rest of the circuit. Since the multimeter’s indication is the only variable being trusted as accurate when calibrating the thermocouple-input temperature transmitter, the multimeter is the only component in the circuit affecting the uncertainty of our calibration (see Note).

Note: This, of course, assumes the potentiometer has a sufficiently fine adjustment capability that we may adjust the millivoltage signal to any desired precision. If we were forced to use a coarse potentiometer, one incapable of being adjusted to the precise amount of millivoltage we desired, then the accuracy of our calibration would also be limited by our inability to precisely control the applied voltage.
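The divider arithmetic for such a home-built source can be sketched as follows. This is a minimal example assuming a simple two-resistor, unloaded divider; the function names and the component values (1 volt supply, 100 ohm lower resistor) are hypothetical and chosen only for illustration.

```python
def divider_output(v_supply: float, r_top: float, r_bottom: float) -> float:
    """Unloaded output voltage across r_bottom of a two-resistor divider."""
    return v_supply * r_bottom / (r_top + r_bottom)


def top_resistor_for(v_supply: float, v_target: float, r_bottom: float) -> float:
    """Series resistance needed so that v_target appears across r_bottom."""
    if not 0 < v_target < v_supply:
        raise ValueError("target must lie between 0 and the supply voltage")
    return r_bottom * (v_supply - v_target) / v_target


# Hypothetical values: reduce a 1 V supply to roughly 10 mV
r_top = top_resistor_for(v_supply=1.0, v_target=0.010, r_bottom=100.0)
v_out = divider_output(1.0, r_top, 100.0)
print(f"r_top = {r_top:.0f} ohm, output = {v_out * 1000:.3f} mV")
```

The computed values need only be approximate. Because the multimeter serves as the standard, the resistors merely have to bring the output into a range where the supply's fine adjustment can do the rest.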

Electrically simulating the output of a thermocouple or RTD may suffice when the instrument we wish to calibrate uses a thermocouple or an RTD as its sensing element. However, there are some temperature-measuring instruments that are not electrical in nature: this category includes bimetallic thermometers, filled-bulb temperature systems, and optical pyrometers. In order to calibrate these types of instruments, we must accurately create the calibration temperatures in the instrument shop. In other words, the instrument to be calibrated must be subjected to an actual temperature of accurately known value.

Even with RTDs and thermocouples – where the sensor signal may be easily simulated using electronic test equipment – there is merit in using an actual source of precise temperature to calibrate the temperature instrument. Simulating the voltage produced by a thermocouple at a precise temperature, for example, is fine for calibrating the instrument normally receiving the millivoltage signal from the thermocouple, but this calibration test does nothing to validate the accuracy of the thermocouple element itself! The best type of calibration for any temperature measuring instrument, from the perspective of overall integrity, is to actually subject the sensing element to a precisely known temperature. For this we need special calibration equipment designed to produce accurate temperature samples on demand.

A time-honored standard for low-temperature industrial calibrations is pure water, specifically the freezing and boiling points of water. Pure water at sea level (full atmospheric pressure) freezes at 32 degrees Fahrenheit (0 degrees Celsius) and boils at 212 degrees Fahrenheit (100 degrees Celsius). In fact, the Celsius temperature scale was originally defined by these two points of phase change for water at sea level.

To use water as a temperature calibration standard, simply prepare a vessel for one of two conditions: thermal equilibrium at freezing or thermal equilibrium at boiling. “Thermal equilibrium” in this context simply means equal temperature throughout the mixed-phase sample. In the case of freezing, this means a well-mixed sample of solid ice and liquid water. In the case of boiling, this means a pot of water at a steady boil (vaporous steam and liquid water in direct contact). What you are trying to achieve here is ample contact between the two phases (either solid and liquid; or liquid and vapor) to eliminate hot or cold spots.

When the entire water sample is homogeneous in temperature and heterogeneous in phase (i.e. a mix of different phases), the sample will have only one degree of thermodynamic freedom: its temperature is an exclusive function of atmospheric pressure. Since atmospheric pressure is relatively stable and well-known, this fixes the temperature at a constant value.
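To give a feel for how strongly the boiling point depends on pressure, the following sketch estimates the boiling temperature of water from barometric pressure using the Antoine equation. The coefficients are a commonly quoted set for water between roughly 1 and 100 degrees Celsius (pressure in mmHg); they are an assumption brought in for illustration and do not come from the text above.

```python
import math

# Assumed Antoine coefficients for water (P in mmHg, T in degC, ~1 to 100 degC)
ANTOINE_A = 8.07131
ANTOINE_B = 1730.63
ANTOINE_C = 233.426


def boiling_point_c(pressure_mmhg: float) -> float:
    """Estimate the boiling temperature of pure water at a given pressure."""
    if pressure_mmhg <= 0:
        raise ValueError("pressure must be positive")
    # Antoine equation log10(P) = A - B / (C + T), solved for T
    return ANTOINE_B / (ANTOINE_A - math.log10(pressure_mmhg)) - ANTOINE_C


for p in (760.0, 700.0):
    print(f"{p:.0f} mmHg -> {boiling_point_c(p):.2f} degC")
```

With these coefficients, a drop from 760 mmHg to 700 mmHg lowers the estimated boiling point by a little over 2 degrees Celsius, which is why the barometric pressure should be checked before trusting boiling water as a 100 degree reference.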

For ultra-precise temperature calibrations in laboratories, the triple point of water is used as the reference. When water is brought to its triple point (i.e. all three phases of solid, liquid, and gas co-existing in direct contact with each other), the sample will have zero degrees of thermodynamic freedom, which means both its temperature and its pressure will become locked at stable values: pressure at 0.006 atmospheres, and temperature at 0.01 degrees Celsius.
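The counts of "one" and "zero" degrees of freedom quoted above are consistent with the Gibbs phase rule, F = C − P + 2, where C is the number of chemical components and P the number of coexisting phases. The text does not name this rule, so treat the sketch below as a supplementary illustration rather than the author's own derivation.

```python
def degrees_of_freedom(components: int, phases: int) -> int:
    """Gibbs phase rule: F = C - P + 2."""
    f = components - phases + 2
    if components < 1 or phases < 1 or f < 0:
        raise ValueError("not a physically possible equilibrium")
    return f


# Pure water is a single-component system (C = 1)
print("ice + liquid:        ", degrees_of_freedom(1, 2))  # temperature follows pressure
print("ice + liquid + vapor:", degrees_of_freedom(1, 3))  # triple point, nothing left free
```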

The major limitation of water as a temperature calibration standard is that it provides only two points of calibration: 0 degC and 100 degC, with the latter being strongly pressure-dependent. If other reference temperatures are required for a calibration, some substance other than water must be used. A variety of substances with known phase-change points have been standardized as fixed points on the International Temperature Scale of 1990 (ITS-90).

The following list is a sample of some of these substances and their respective phase states and temperatures:

- Neon (triple point) = −248.6 deg C

- Oxygen (triple point) = −218.8 deg C

- Mercury (triple point) = −38.83 deg C

- Tin (freezing point) = 231.93 deg C

- Zinc (freezing point) = 419.53 deg C

- Aluminum (freezing point) = 660.32 deg C

- Copper (freezing point) = 1084.62 deg C
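A table like this lends itself to a simple lookup. The sketch below stores the values exactly as listed above and finds the fixed point closest to a desired calibration temperature; the dictionary and function names are illustrative only.

```python
# Fixed-point temperatures in degC, copied from the list above
FIXED_POINTS_C = {
    "Neon (triple point)": -248.6,
    "Oxygen (triple point)": -218.8,
    "Mercury (triple point)": -38.83,
    "Tin (freezing point)": 231.93,
    "Zinc (freezing point)": 419.53,
    "Aluminum (freezing point)": 660.32,
    "Copper (freezing point)": 1084.62,
}


def nearest_fixed_point(target_c: float) -> tuple[str, float]:
    """Return the (name, temperature) of the fixed point closest to target_c."""
    name = min(FIXED_POINTS_C, key=lambda n: abs(FIXED_POINTS_C[n] - target_c))
    return name, FIXED_POINTS_C[name]


name, temp = nearest_fixed_point(400.0)
print(f"Closest fixed point to 400 degC: {name} at {temp} degC")
```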

Substances at the triple point must be in thermal equilibrium with solid, liquid, and vaporous phases co-existing. Substances at the freezing point must be a two-phase mixture of solid and liquid (i.e. a liquid in the process of freezing, neither a completely liquid nor a completely solid sample). The physical principle at work in all of these examples is that of latent heat: the thermal energy exchange required to change the phase of a substance.

So long as the minimum heat exchange requirement for complete phase change is not met, a substance in the midst of phase transition will exhibit a fixed temperature, and therefore behave as a temperature standard. Small amounts of heat gain or loss to such a sample will merely change the proportion of one phase to another (e.g. how much solid versus how much liquid), but the temperature will remain locked at a constant value until the sample becomes a single phase.
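A quick estimate shows how much "thermal ballast" a phase-change sample provides. The sketch below assumes a latent heat of fusion for water of about 334 kJ/kg and a steady heat leak into an ice bath; the ice mass and heat-leak figures are hypothetical values chosen for illustration.

```python
LATENT_HEAT_FUSION_WATER = 334e3  # J/kg, approximate value for water ice


def heat_to_melt_j(ice_mass_kg: float) -> float:
    """Heat the bath can absorb before all of the ice has melted."""
    return ice_mass_kg * LATENT_HEAT_FUSION_WATER


def hold_time_s(ice_mass_kg: float, heat_leak_w: float) -> float:
    """Time the bath stays at the freezing point under a steady heat leak."""
    if heat_leak_w <= 0:
        raise ValueError("heat leak must be positive")
    return heat_to_melt_j(ice_mass_kg) / heat_leak_w


# Hypothetical bath: 0.5 kg of ice absorbing 10 W from its surroundings
seconds = hold_time_s(0.5, 10.0)
print(f"Bath holds its temperature for about {seconds / 3600:.1f} hours")
```

Under these assumed figures the bath remains at the freezing point for several hours, and only the proportion of ice to liquid changes during that time.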

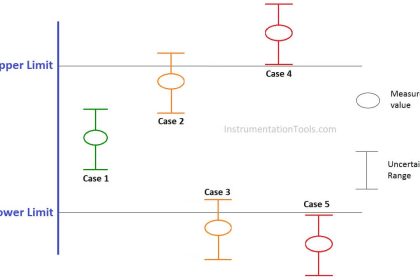

One major disadvantage of using phase changes to produce accurate temperatures in the shop is the limited availability of temperatures. If you need to create some other temperature for calibration purposes, you either need to find a suitable material with a phase change happening at that exact temperature (good luck!) or you need to find a finely adjustable temperature source and use an accurate thermometer as the reference against which your instrument under test is compared. The latter scenario is analogous to the use of a high-accuracy voltmeter and an adjustable voltage source to calibrate a voltage instrument: comparing one instrument (trusted to be accurate) against another (under test).
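The comparison itself reduces to simple arithmetic. The sketch below expresses the difference between the instrument under test and the reference thermometer as a percentage of the instrument's calibrated span, one common way of stating calibration error. All readings and range values shown are hypothetical.

```python
def error_percent_of_span(reference: float, indicated: float,
                          lrv: float, urv: float) -> float:
    """Error of the instrument under test, as a percentage of its span."""
    span = urv - lrv
    if span <= 0:
        raise ValueError("upper range value must exceed lower range value")
    return (indicated - reference) / span * 100.0


# Hypothetical readings for an instrument ranged 0 to 200 degC:
# (reference thermometer, instrument under test)
readings = [(50.0, 50.3), (100.0, 100.5), (150.0, 149.8)]

for ref, ind in readings:
    err = error_percent_of_span(ref, ind, lrv=0.0, urv=200.0)
    print(f"reference {ref:6.1f} degC, indicated {ind:6.1f} degC, error {err:+.2f} % of span")
```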

Laboratory-grade thermometers are relatively easy to secure. Variable temperature sources suitable for calibration use include oil bath and sand bath calibrators. These devices are exactly what they sound like: small pots filled with either oil or sand, containing an electric heating element and a temperature control system using a laboratory-grade (NIST-traceable) thermal sensor. In the case of sand baths, a small amount of compressed air is introduced at the bottom of the vessel to “fluidize” the sand so the grains move around much like the molecules of a liquid, helping the system reach thermal equilibrium. To use a bath-type calibrator, place the temperature instrument to be calibrated such that the sensing element dips into the bath, then wait for the bath to reach the desired temperature.

An oil bath temperature calibrator is shown in the following photograph, with sockets to accept seven temperature probes into the heated oil reservoir:

This particular oil bath unit has no built-in indication of temperature suitable for use as the calibration standard. A standard-grade thermometer or other temperature-sensing element must be inserted into the oil bath along with the sensor under test in order to provide a reference indication useful for calibration.

Dry-block temperature calibrators also exist for creating accurate calibration temperatures in the instrument shop environment. Instead of a fluid (or fluidized powder) bath as the thermal medium, these devices use metal blocks with blind (dead-end) holes drilled for the insertion of temperature sensing instruments.

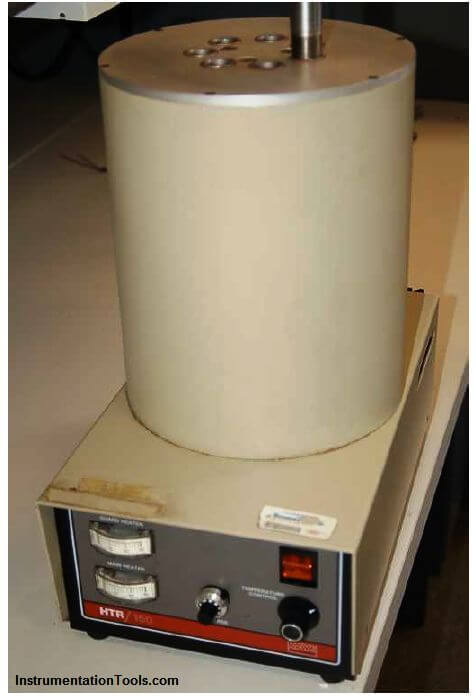

An inexpensive dry-block temperature calibrator intended for bench-top service is shown in this photograph:

This particular dry-block temperature calibrator does provide direct visual indication of the block temperature by means of a digital display on its front panel. If greater accuracy is desired, a laboratory reference-grade temperature sensor may be inserted into the same block along with the sensor being tested, and that reference-grade sensor relied upon as the temperature standard rather than the dry-block calibrator’s digital display.

Optical temperature instruments require a different sort of calibration tool: one that emits radiation equivalent to that of the process object at certain specified temperatures. This type of calibration tool is called a blackbody calibrator (see Note), having a target area at which the optical instrument may be aimed. Like oil and sand bath calibrators, a blackbody calibrator relies on an internal temperature sensing element as a reference, to control the optical emissions of the blackbody target at any specified temperature within a practical range.

Note: A “black body” is an idealized object having an emissivity value of exactly one (1). In other words, a black body is a perfect radiator of thermal energy. Interestingly, a blind hole drilled into any object at sufficient depth acts as a black body, and is sometimes referred to as a cavity radiator.
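The significance of emissivity can be illustrated with the Stefan-Boltzmann law, which gives the total power radiated per unit area as emissivity times a constant times the fourth power of absolute temperature. The text does not present this law, so the sketch below is a supplementary illustration; the function name and the example temperature are arbitrary.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W/(m^2 K^4)


def radiant_exitance(temp_c: float, emissivity: float = 1.0) -> float:
    """Total radiated power per unit area (W/m^2) of a surface at temp_c."""
    if not 0.0 <= emissivity <= 1.0:
        raise ValueError("emissivity must lie between 0 and 1")
    temp_k = temp_c + 273.15  # the law requires absolute temperature
    return emissivity * STEFAN_BOLTZMANN * temp_k ** 4


ideal = radiant_exitance(500.0)                  # ideal black body
real = radiant_exitance(500.0, emissivity=0.5)   # hypothetical real surface
print(f"black body: {ideal:.0f} W/m^2, emissivity 0.5: {real:.0f} W/m^2")
```

A surface with emissivity below one radiates proportionally less than the ideal black body at the same temperature, which is why a calibration target with emissivity very close to one is desirable.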
