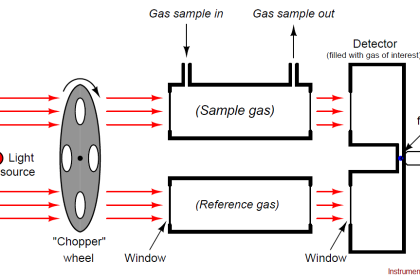

The turbidity method is based on a comparison of the intensity of light scattered by a sample under defined conditions with the intensity of light scattered by a standard reference suspension. A turbidimeter is a nephelometer with a visible light source for illuminating the sample and one or more photoelectric detectors placed at ninety degrees to the path of the light. Note: the calibration procedure below is for a turbidimeter in which the sample is placed in a cuvette.
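As a simplified illustration of this comparison, assume the detector signal increases linearly with turbidity over the range in use. This is an assumption for illustration; actual instruments apply the manufacturer's own calibration algorithm. Using notation introduced here ($I_0$ for the signal from a zero standard, $I_{\mathrm{std}}$ for the signal from a standard of known turbidity $T_{\mathrm{std}}$, and $I_s$ for the signal from the sample), the sample turbidity $T_s$ is:

$$
T_s = T_{\mathrm{std}} \, \frac{I_s - I_0}{I_{\mathrm{std}} - I_0}
$$

For example, with hypothetical readings $I_0 = 2$ and $I_{\mathrm{std}} = 202$ (arbitrary units) for a 20 NTU standard, a sample reading of $I_s = 52$ gives $T_s = 20 \times 50/200 = 5$ NTU.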

Some instruments accept only one calibration standard. For those instruments, the second and any additional standards serve as check points.

Calibration Procedure:

1. Allow the calibration standards to equilibrate to ambient temperature. Commercially available polymer primary standards (AMCO-AEPA-1) are preferred; however, standards can be prepared from formazin (read the warning on the label before use) according to EPA Method 180.1. Other standards may be used if they can be shown to be equivalent to these standards.

2. If the standard is not supplied in a sealed cuvette, rinse a cuvette with deionized water and shake it to remove as much water as possible. Do not wipe the inside of the cuvette dry, because lint from the wipe may remain in it. Add the standard to the cuvette.

3. Before performing the calibration, make sure the cuvettes are not scratched and that their outside surfaces are dry and free of fingerprints and dust. Discard a scratched cuvette; clean a dirty one. Note: some manufacturers require the cuvette to be oriented in the instrument in a particular direction for an accurate reading.

4. Select a low-value standard, such as 0 or 0.02 NTU, and calibrate according to the manufacturer’s instructions. Note: a zero standard (approximately 0 NTU) can be prepared by passing distilled water through a membrane filter with a 0.45 µm pore size.

5. Select a high-value standard and calibrate according to the manufacturer’s instructions or, if the instrument will not accept a second standard, use it to verify the calibration. When verifying, the instrument should read the standard value within the instrument’s stated specifications. If the instrument has multiple ranges (scales), check each range that will be used during the sampling event with a standard that falls within that range.

6. Record the calibration information on the calibration log sheet.
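The range check in step 5 and the record in step 6 can be sketched in Python. This is a hypothetical helper, not part of any instrument software. The tolerance rule (the larger of ±10 % of the standard value or ±0.1 NTU), the range limits, the readings, and the log columns are all placeholder assumptions; substitute the tolerances from the manufacturer’s specifications and the columns of your own calibration log sheet.

```python
"""Hypothetical sketch of the verification in steps 5 and 6.

Assumptions (not from the procedure): the tolerance rule, the range limits,
the example readings, and the log-sheet columns are placeholders.
"""
import csv
import io
from dataclasses import dataclass


@dataclass
class CheckResult:
    range_name: str
    standard_ntu: float
    reading_ntu: float
    passed: bool


def within_spec(standard_ntu: float, reading_ntu: float,
                rel_tol: float = 0.10, abs_tol: float = 0.1) -> bool:
    """Return True if the reading is within the (assumed) instrument tolerance."""
    allowed = max(rel_tol * standard_ntu, abs_tol)
    return abs(reading_ntu - standard_ntu) <= allowed


def verify_ranges(ranges: dict[str, tuple[float, float]],
                  readings: dict[float, float]) -> list[CheckResult]:
    """Check every range used during sampling with a standard inside that range.

    ranges   -- range name -> (low, high) limits in NTU (hypothetical values)
    readings -- standard value in NTU -> instrument reading in NTU
    Raises ValueError if a range has no standard that falls within it.
    """
    results = []
    for name, (low, high) in ranges.items():
        in_range = [s for s in readings if low <= s <= high]
        if not in_range:
            raise ValueError(f"no standard falls within range {name!r}")
        for std in in_range:
            reading = readings[std]
            results.append(CheckResult(name, std, reading, within_spec(std, reading)))
    return results


def log_rows(results: list[CheckResult]) -> str:
    """Format results as CSV text for a calibration log (hypothetical columns)."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["range", "standard_ntu", "reading_ntu", "pass"])
    for r in results:
        writer.writerow([r.range_name, r.standard_ntu, r.reading_ntu,
                         "yes" if r.passed else "no"])
    return buf.getvalue()


if __name__ == "__main__":
    # Hypothetical instrument ranges and readings, for illustration only.
    ranges = {"0-10 NTU": (0.0, 10.0), "10-100 NTU": (10.0, 100.0)}
    readings = {0.02: 0.03, 5.0: 5.2, 50.0: 44.0}
    print(log_rows(verify_ranges(ranges, readings)))
```

The helper raises an error rather than silently skipping a range with no in-range standard, because step 5 calls for every range used during the sampling event to be checked.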