As previously defined, calibration refers to the checking and adjustment of an instrument so that its output faithfully corresponds to its input throughout a specified range.

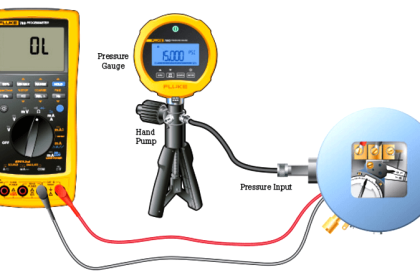

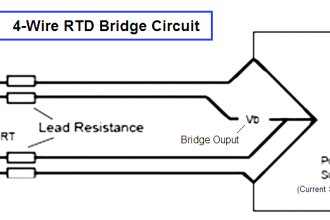

In order to calibrate an instrument, we must have some means of knowing the input and/or output quantities associated with the instrument under test.

A substance or device used as a reference to compare against an instrument’s response is called a calibration standard. Simply put, a calibration standard is something we may compare the calibrated instrument to. Thus, any calibration can only be as good as the standard used.

Calibration standards fall into two broad categories: standards that produce accurately known physical quantities (e.g. pressure, temperature, voltage, current), and standards that simply measure physical quantities to a high degree of accuracy.

An example of the former would be the use of boiling water (at sea-level atmospheric pressure) to produce a temperature of 100 degrees Celsius (212 degrees Fahrenheit) in order to calibrate a temperature gauge. An example of the latter would be the use of a laboratory-quality precision thermometer to measure some arbitrary temperature source for comparison against the temperature gauge being calibrated.

In metrology labs, the ultimate standards are based on fundamental constants of nature, and are called intrinsic standards. A modern example of an intrinsic standard for time is the so-called atomic clock, which uses isolated atoms of cesium to produce frequencies that are inherently fixed and reproducible worldwide.

Instrument shops located in industrial facilities cannot afford the capital and consumable costs associated with intrinsic standards, and so must rely on other devices for their calibration purposes.

Ideally, there should be a “chain” of calibration from any device used as a shop standard traceable all the way back to some intrinsic standard in a national-level or primary metrology lab.

Calibration standards used in instrument shops for industrial calibration work should therefore be periodically sent to a local metrology lab for re-standardization. There, their accuracy may be checked against other (higher-level) standards, which themselves are checked against even higher-level calibration standards, ultimately traceable all the way to intrinsic standards. Each step down the calibration “chain” adds some measurement uncertainty.

Intrinsic standards possess the least amount of uncertainty, while field instruments (e.g. pressure transmitters, temperature gauges) exhibit the greatest uncertainties.

It is important that the degree of uncertainty in the accuracy of a test instrument is significantly less than the degree of uncertainty we hope to achieve in the instruments we calibrate.

Otherwise, calibration becomes a pointless exercise. The ratio of the field instrument’s allowed uncertainty (its tolerance) to the uncertainty of the test instrument is called the Test Uncertainty Ratio, or TUR.

A good rule of thumb is to maintain a TUR of at least 4:1 (ideally 10:1 or better), meaning the test equipment is many times more accurate (less uncertain) than the field instruments we calibrate with it.
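The rule of thumb is easy to check numerically. The sketch below assumes the TUR is computed as the tolerance of the instrument under test divided by the uncertainty of the calibration standard, both expressed in the same units (percent of span here). The function names `compute_tur` and `meets_rule_of_thumb` and the example figures are illustrative assumptions, not taken from any standard.

```python
def compute_tur(instrument_tolerance, standard_uncertainty):
    """Ratio of the instrument's tolerance to the standard's uncertainty.

    Both arguments must be positive and in the same units
    (for example, percent of span).
    """
    if instrument_tolerance <= 0 or standard_uncertainty <= 0:
        raise ValueError("tolerance and uncertainty must be positive")
    return instrument_tolerance / standard_uncertainty


def meets_rule_of_thumb(tur, minimum=4.0):
    """True if the TUR is at least the chosen minimum (4:1 by default)."""
    return tur >= minimum


# Hypothetical example: a gauge to be held within +/- 1% of span,
# checked against a standard good for +/- 0.25% of span.
tur = compute_tur(1.0, 0.25)
print(f"TUR = {tur:g}:1, adequate: {meets_rule_of_thumb(tur)}")
```

Passing `minimum=10.0` applies the stricter 10:1 target instead.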

I have personally witnessed the confusion and wasted time that results from trying to calibrate a field instrument to a tighter tolerance than what the calibration standard is capable of.

In one case, an instrument technician attempted to calibrate a pneumatic pressure transmitter to a tolerance of ± 0.25% of span using a test gauge that was only good for ± 1% of the same span.

This poor technician kept going back and forth, adjusting the transmitter’s zero and span screws over and over again in a futile attempt to rein in the transmitter’s response within the stated specification of ± 0.25%.

After giving up, he tested the test gauges by comparing three of them at once, tied together on a common air pressure tube. When he did this, it became clear that no two test gauges would consistently agree with each other within the specified tolerance over the 3 to 15 PSI range.

As he raised and lowered the pressure, the gauges’ indications would deviate from one another far more than ± 0.25% across the measurement range.
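The technician’s cross-check can be expressed as a small calculation: at each test pressure, take the spread between the highest and lowest gauge reading, then express the worst spread as a percentage of span. The readings below are invented for illustration (the actual values were not recorded), and `worst_spread_percent` is a hypothetical helper name.

```python
SPAN_PSI = 15.0 - 3.0  # 3 to 15 PSI range gives a 12 PSI span


def worst_spread_percent(readings_by_point, span):
    """Largest disagreement among simultaneous readings, in percent of span.

    readings_by_point: one tuple of gauge readings per test pressure,
    all gauges sharing the same pressure source.
    """
    worst = max(max(point) - min(point) for point in readings_by_point)
    return 100.0 * worst / span


# Invented readings (PSI) from three gauges at three test pressures.
readings = [
    (3.00, 3.05, 2.96),
    (9.00, 9.08, 8.95),
    (15.00, 14.90, 15.06),
]

spread = worst_spread_percent(readings, SPAN_PSI)
print(f"worst disagreement: {spread:.2f}% of span")
```

Two gauges that each stay within ± 0.25% of span can differ from each other by at most 0.5% of span, so a spread well beyond that shows that at least one gauge is outside the tolerance.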

Simply put, the inherent uncertainty of the gauges exceeded the uncertainty he was trying to calibrate the transmitter to.

As a result, his calibration “standard” was in fact shifting on him as he performed the calibration. His actions were analogous to trying to set up a fixed-position cannon to repeatedly hit a moving target.

The lesson to be learned here is to always ensure the standards used to calibrate industrial instruments are accurate enough for the tolerance being calibrated to.
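To put a number on “accurate enough” for the transmitter in this story, the sketch below converts the ± 0.25% of span tolerance into PSI over the 3 to 15 PSI range and works out how good a standard would need to be for a chosen TUR. The helper names are hypothetical, and the 4:1 target is simply the rule of thumb quoted earlier.

```python
def percent_of_span_to_units(percent, range_low, range_high):
    """Convert a percent-of-span figure into engineering units."""
    return percent / 100.0 * (range_high - range_low)


def required_standard_percent(instrument_tolerance_percent, target_tur=4.0):
    """Largest standard uncertainty (percent of span) that still meets the TUR."""
    return instrument_tolerance_percent / target_tur


tolerance_psi = percent_of_span_to_units(0.25, 3.0, 15.0)  # transmitter tolerance
gauge_psi = percent_of_span_to_units(1.0, 3.0, 15.0)       # test gauge rating
needed = required_standard_percent(0.25)                   # for a 4:1 TUR

print(f"transmitter tolerance: +/- {tolerance_psi:.3f} PSI")
print(f"test gauge uncertainty: +/- {gauge_psi:.3f} PSI")
print(f"standard needed for 4:1: +/- {needed:.4f}% of span")
```

With these figures, the test gauge’s ± 1% rating is sixteen times looser than the ± 0.0625% of span that a 4:1 ratio would call for.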

No calibration standard is perfect, but perfection is not what we need. Our goal is to be accurate enough that the final calibration will be reliable within specified boundaries.