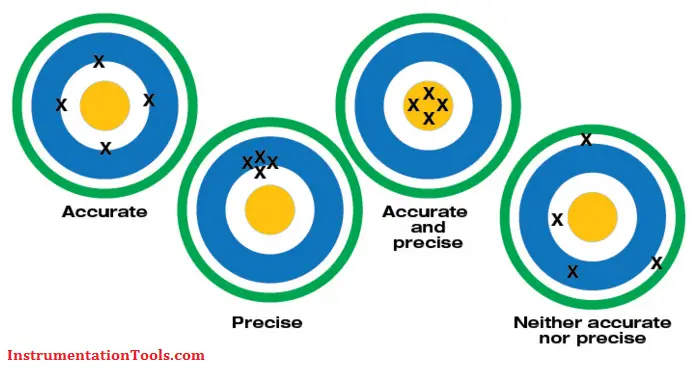

Accuracy: An instrument’s degree of veracity—how close its measurement comes to the actual or reference value of the signal being measured.

Resolution: The smallest increment an instrument can detect and display—hundredths, thousandths, millionths.

Range: The upper and lower limits within which an instrument can measure a value or signal, such as amps, volts and ohms.

Precision: An instrument’s degree of repeatability—how reliably it can reproduce the same measurement over and over.
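Accuracy and precision are easy to confuse. The short sketch below separates them using invented readings of a hypothetical 10.000 V reference. Accuracy is modeled as the offset of the average reading from the reference, and precision as the spread (sample standard deviation) of repeated readings. The function names and the data for "meter A" and "meter B" are assumptions for illustration only.

```python
from statistics import mean, stdev

def accuracy_error(readings, reference):
    """Offset of the average reading from the reference value (how accurate)."""
    return mean(readings) - reference

def repeatability(readings):
    """Sample standard deviation of repeated readings (how precise)."""
    return stdev(readings)

# Hypothetical readings of a 10.000 V reference taken with two meters
reference = 10.000
meter_a = [10.201, 10.199, 10.200, 10.202, 10.198]  # tightly grouped but offset: precise, not accurate
meter_b = [9.95, 10.06, 9.98, 10.04, 9.97]           # centered on 10 V but scattered: accurate, less precise

for name, readings in (("A", meter_a), ("B", meter_b)):
    print(f"meter {name}: offset={accuracy_error(readings, reference):+.3f} V, "
          f"spread={repeatability(readings):.3f} V")
```

Meter A repeats itself closely but reads about 0.2 V high; meter B averages to the reference but scatters more from reading to reading.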

Accuracy

Accuracy refers to the largest allowable error that occurs under specific operating conditions.

Accuracy is expressed as a percentage and indicates how close the displayed measurement is to the actual (standard) value of the signal measured. Accuracy requires a comparison to an accepted industry standard.

The accuracy of a specific digital multimeter is more or less important depending on the application. For example, most AC power line voltages vary ±5% or more. An example of this variation is a voltage measurement taken at a standard 115 V AC receptacle. If a digital multimeter is only used to check if a receptacle is energized, a DMM with a ±3% measurement accuracy is appropriate.

Some applications, such as calibration of automotive, medical, aviation or specialized industrial equipment, may require higher accuracy. A reading of 100.0 V on a DMM with an accuracy of ±2% can range from 98.0 V to 102.0 V. This may be fine for some applications, but unacceptable for sensitive electronic equipment.

Accuracy may also include a specified number of digits (counts) added to the basic accuracy rating. One count is one step of the least significant displayed digit. For example, an accuracy of ±(2%+2) means that a reading of 100.0 V on the multimeter can be from 97.8 V to 102.2 V: 2% of 100.0 V is 2.0 V, and 2 counts of 0.1 V add another 0.2 V. A DMM with higher accuracy is suitable for a greater number of applications.
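The arithmetic behind a ±(% + counts) specification can be sketched as a small helper. This is a minimal illustration, not a manufacturer's formula: the function name `reading_limits` is hypothetical, and it assumes one count equals 0.1 V because the 100.0 V reading shows a tenths digit. `Decimal` is used so the limits come out exact rather than with floating-point noise.

```python
from decimal import Decimal

def reading_limits(reading, percent, counts=0, resolution="0.1"):
    """Return (low, high) limits for a reading given a spec of ±(percent% + counts).

    One count is assumed to be one step of the least significant displayed
    digit, i.e. the display resolution on the selected range.
    """
    value = Decimal(str(reading))
    error = value * Decimal(str(percent)) / 100 + counts * Decimal(resolution)
    return float(value - error), float(value + error)

print(reading_limits("100.0", 2))            # ±2%:       (98.0, 102.0)
print(reading_limits("100.0", 2, counts=2))  # ±(2% + 2): (97.8, 102.2)
```

Setting `counts=0` reproduces the plain ±2% case; the added counts widen the band by a fixed amount that does not depend on the size of the reading.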

Resolution

Resolution is the smallest increment a tool can detect and display.

For a nonelectrical example, consider two rulers. One marked in 1/16-inch segments offers greater resolution than one marked in quarter-inch segments.

Imagine a simple test of a 1.5 V household battery. If a digital multimeter (DMM) has a resolution of 1 mV on the 3 V range, it is possible to see a change of 1 mV while reading 1 V. The user could see changes as small as one one-thousandth of a volt, or 0.001 V.
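One way to picture resolution is as rounding the true value to the nearest step the display can show. The sketch below is a simplified model, not how any particular meter works internally: `displayed` is a hypothetical helper, the battery voltages are invented, and it assumes the resolution is a power of ten (such as 0.001 or 0.01) and that the display rounds to the nearest step.

```python
from decimal import Decimal

def displayed(value, resolution):
    """Round a true value to the nearest displayable step.

    Assumes `resolution` is a power of ten given as a string, e.g. "0.001".
    """
    step = Decimal(resolution)
    return Decimal(str(value)).quantize(step)

# Two hypothetical battery voltages that differ by 0.4 mV
print(displayed(1.5483, "0.001"), displayed(1.5487, "0.001"))  # 1.548 1.549
print(displayed(1.5483, "0.01"), displayed(1.5487, "0.01"))    # 1.55 1.55
```

With 1 mV resolution the two readings are distinguishable; with 10 mV resolution the same change disappears from the display.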

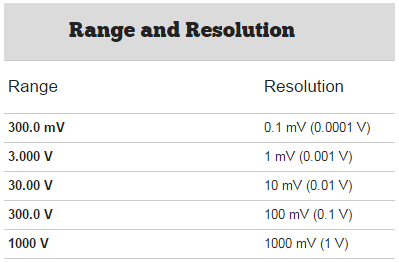

Resolution may be listed in a meter’s specifications as maximum resolution, which is the smallest value that can be discerned on the meter’s lowest range setting.

For example, a resolution of 100 mV (0.1 V) on the multimeter's highest voltage range means that, on that range, the voltage will be displayed to the nearest tenth of a volt.

Resolution is improved by reducing the DMM’s range setting as long as the measurement is within the set range.
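The link between range setting and resolution can be sketched for a count-based display. This assumes a hypothetical 6000-count meter with 6 V, 60 V and 600 V ranges, and the simplification that resolution equals the range's full scale divided by the display counts; real meters list these values per range in their specifications.

```python
from decimal import Decimal

def range_resolution(full_scale_volts, display_counts):
    """Smallest displayable step on a range, assuming resolution = full scale / counts."""
    return Decimal(full_scale_volts) / display_counts

# Hypothetical 6000-count meter with three voltage ranges
for full_scale in (6, 60, 600):
    print(f"{full_scale} V range -> {range_resolution(full_scale, 6000)} V per count")
```

Under these assumptions the 600 V range resolves 0.1 V while the 6 V range resolves 0.001 V, which is why dropping to a lower range (when the signal fits) gives a finer reading.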

Range

Digital multimeter range and resolution are related, and both are sometimes listed in a DMM's specifications.

Many multimeters offer an autorange function that automatically selects the appropriate range for the magnitude of the measurement being made. This provides both a meaningful reading and the best resolution of a measurement.

If the measurement is higher than the set range, the multimeter will display OL (overload). The most accurate measurement is obtained at the lowest possible range setting without overloading the multimeter.
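The autorange and overload behavior described above can be sketched as picking the lowest range that the signal does not exceed. This is a simplified model under stated assumptions: the range list `RANGES_V` and the helpers `select_range` and `reading_on` are hypothetical, and a value at or above full scale is treated as an overload.

```python
RANGES_V = (6, 60, 600)  # hypothetical full-scale voltage ranges

def select_range(value, ranges=RANGES_V):
    """Autorange: return the lowest range that holds |value|, or None if all overload."""
    for full_scale in sorted(ranges):
        if abs(value) < full_scale:
            return full_scale
    return None  # the meter would show OL on every range

def reading_on(value, full_scale):
    """Manual range: show the value, or 'OL' if it exceeds the selected range."""
    return "OL" if abs(value) >= full_scale else value

print(select_range(1.5))   # 6
print(select_range(115))   # 600
print(select_range(750))   # None
print(reading_on(115, 60)) # OL
```

Choosing the lowest non-overloading range is what gives the best resolution for a given measurement.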

Note: The topics above are discussed with respect to multimeters.

Source: Fluke

